Becca Monaghan

Mar 25, 2023

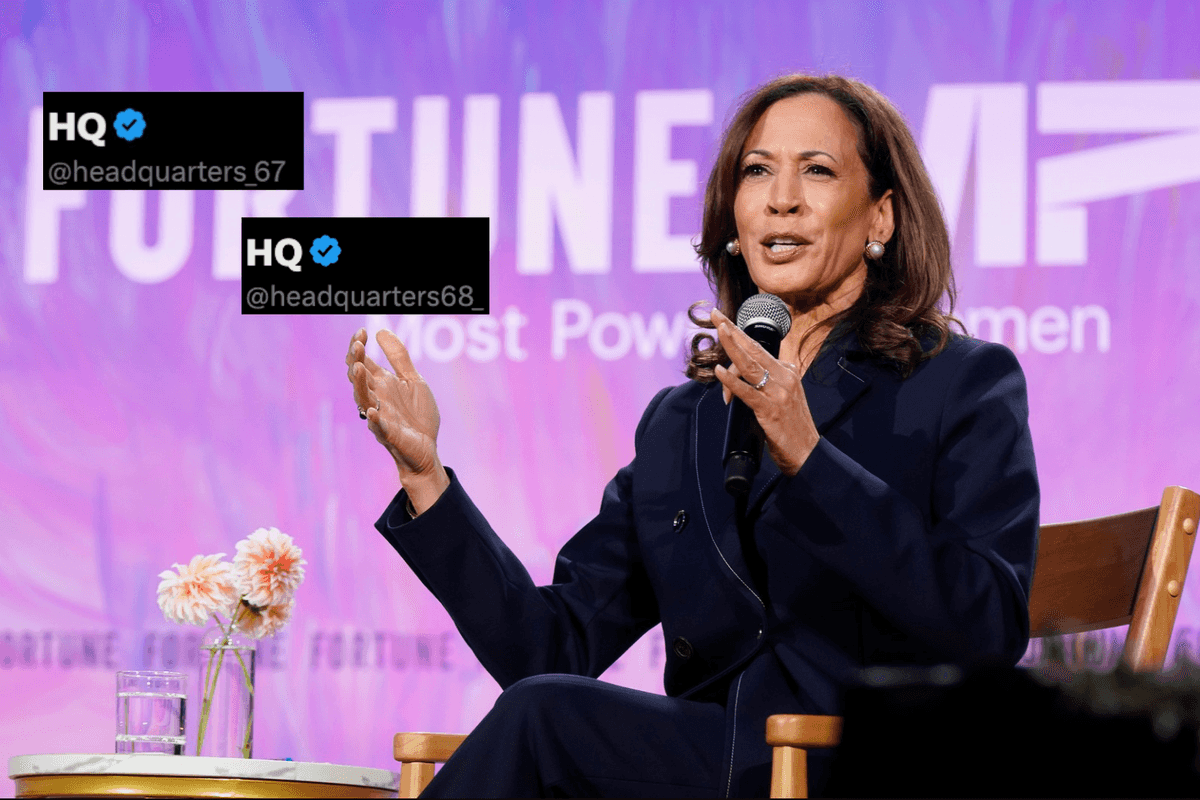

Earlier this week, worryingly realistic deepfake images of Donald Trump's "arrest" went viral online.

The former president believed he would be arrested on Tuesday 21 March amid an investigation into Stormy Daniels’s allegation that she was paid "hush money" by the Republican.

Taking to his Truth Social platform, Trump wrote: "Now illegal leaks from a corrupt and highly political Manhattan district attorney’s office, which has allowed new records to be set in violent crime … indicate that, with no crime being able to be proven, and based on an old and fully debunked (by numerous other prosecutors) fairytale, the far and away leading Republican candidate and former president of the United States of America, will be arrested on Tuesday of next week."

He added: "Protest, take our nation back!"

As we now know, Trump was not indicted on Tuesday.

In the meantime, Twitter user Eliot Higgins was curious to see what the hypothetical scenario would look like.

"Making pictures of Trump getting arrested while waiting for Trump's arrest," he tweeted, along with two AI-generated images of Trump being taken down by police.

While Higgins's AI images were impressively realistic, some people insisted that such images should include watermarks that "disclose they are AI-generated and not real."

Adam Levin, cybersecurity expert and host of What the Hack, told Indy100 that sharing such deceptive pictures can have "extremely dangerous" consequences.

"As we’ve seen with other forms of misinformation and disinformation online, the consequences of deepfakes can range from the relatively harmless to the extremely dangerous," Levin explained. "Deepfakes can cause wild swings in financial markets, they can be convincing enough to make an innocent person appear guilty of a crime, or ruin someone’s reputation."

He went on to suggest that deepfakes could be used as part of a "larger cyber war tactic."

"Imagine a deepfake featuring president Biden announcing a nuclear strike on Russia, followed immediately by a full spectrum assault on the grid. That would foment panic," he said.

"The technology and technical know-how to generate a deepfake is minimal and the potential for damage is vast."

As for whether there are any repercussions for creating or sharing deepfakes, Levin noted the difficulty of tracing content back to the person who made it.

"The legal system hasn’t really caught up to the technology," he explained. "The legality and ethics of deepfakes are extremely murky, especially when using the likeness of a public figure."

Some deepfake images and videos have subtle giveaways that they're not genuine.

Levin said that deepfake images often look "asymmetrical or have strange features like extra fingers," as deepfakes are "bad at hands."

He also urged people to look out for "blurriness, differently coloured eyes or mismatched ears."

Levin added: "Videos may have poorly-synced audio, graininess or jagged motion. Deepfake-generated audio clips can be harder to identify, but are often flat in tone with very little fluctuation in volume, pitch or speed."
