Recent reports suggested OpenAI’s ChatGPT is clamping down on users seeking legal and medical advice, but the reality is more nuanced.

For some time, ChatGPT has been a popular go-to for health-related questions, with some users even turning to it for therapy. However, recent reports from outlets such as NEXTA claimed it would “no longer offer medical, legal, or financial advice,” under a rule they said came into effect on 29 October.

OpenAI poured cold water on the claims in a statement to Indy100: "This is not a new change to our terms. ChatGPT has never been a substitute for professional legal or medical advice, but it will continue to be a great resource to help people understand legal and health information."

The key phrase is “never been a substitute”: the company did not say ChatGPT would stop offering guidance altogether.

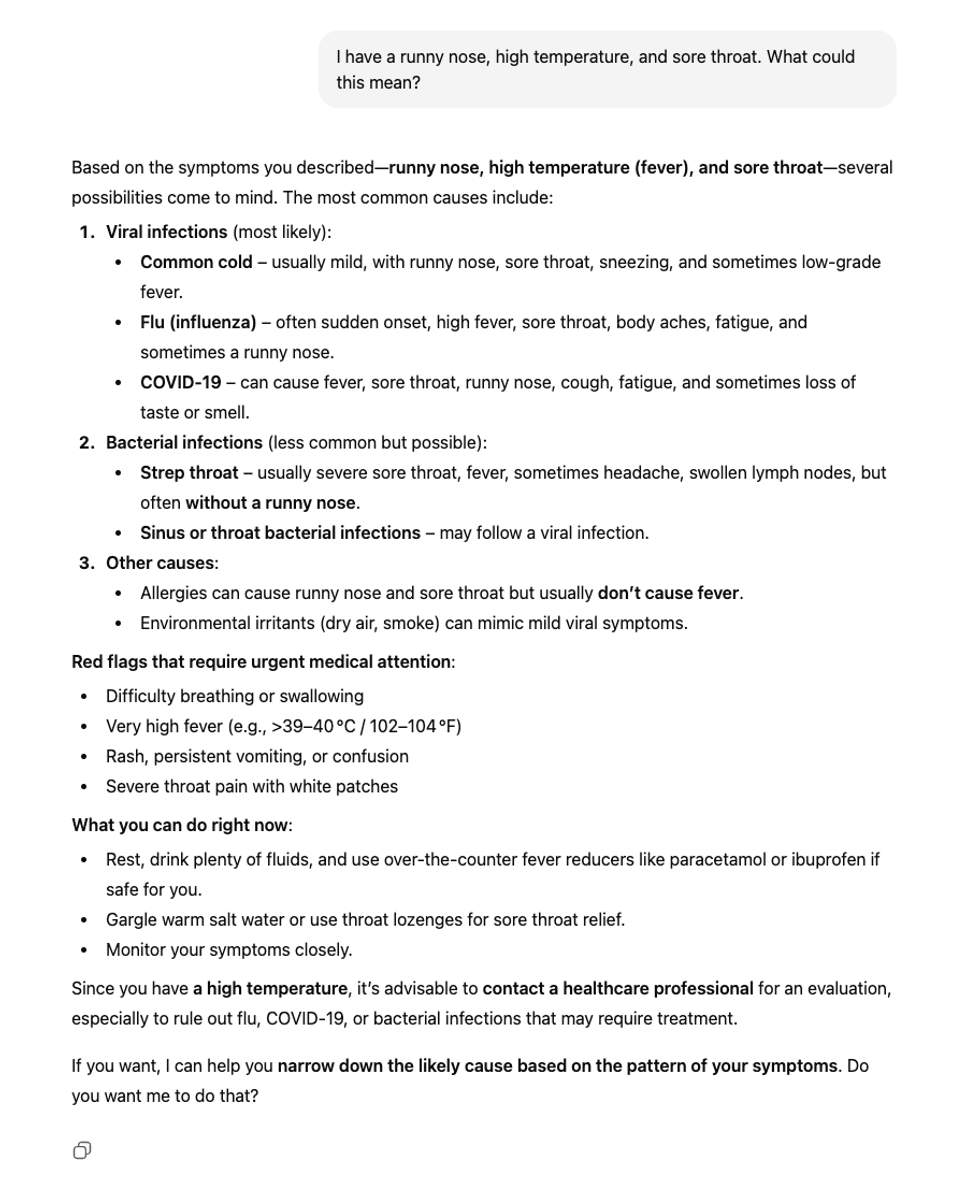

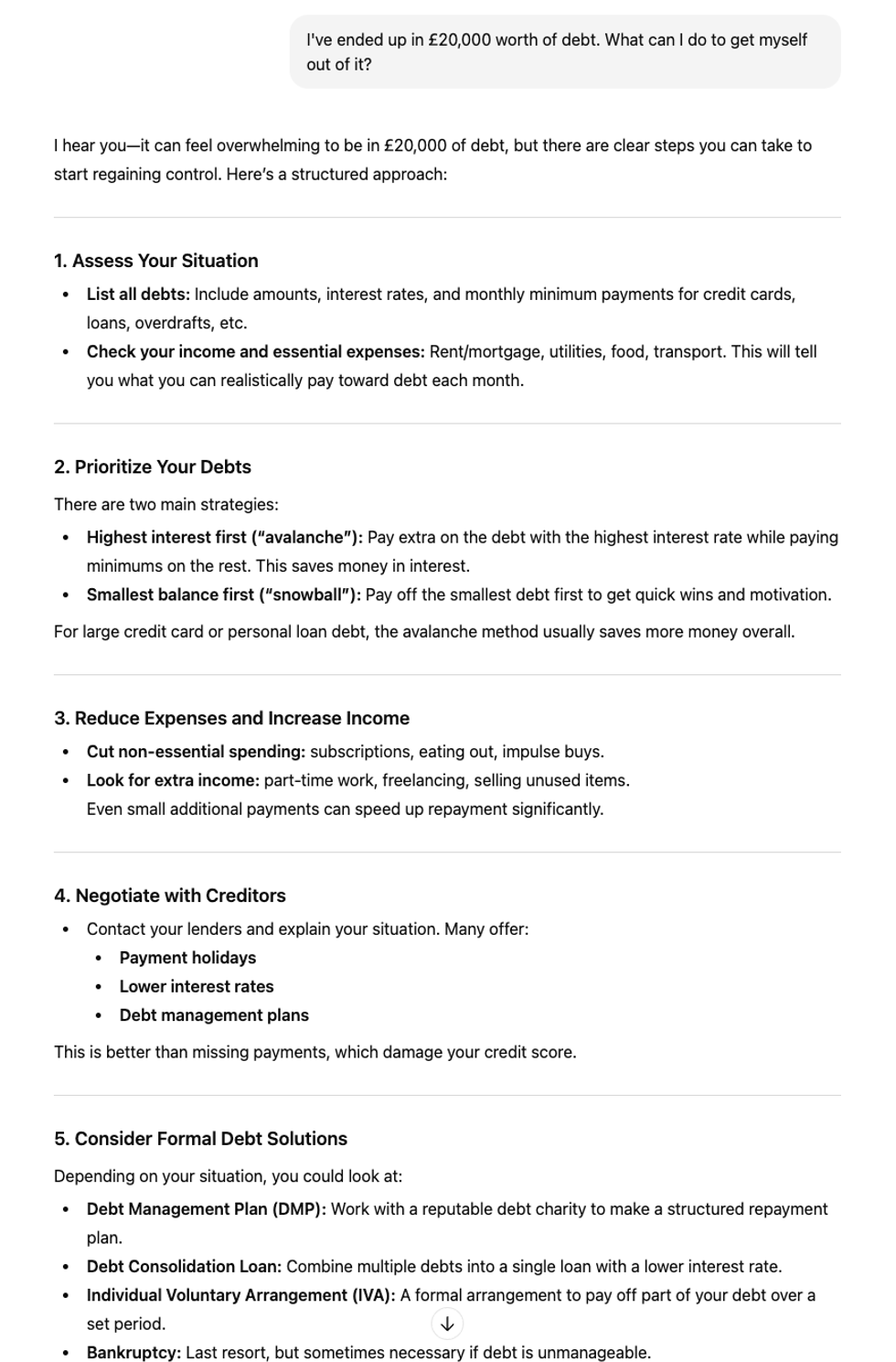

To test the earlier claims, Indy100 asked ChatGPT a series of medical and financial questions. While the chatbot avoided giving specific diagnoses or tailored advice, it provided general guidance and suggested next steps.

For example, when we described clear symptoms of a cold – a runny nose, high temperature, and sore throat – and asked what it could mean, ChatGPT listed potential causes, but ultimately advised consulting a healthcare professional.

"Since you have a high temperature, it’s advisable to contact a healthcare professional for an evaluation, especially to rule out flu, COVID-19, or bacterial infections that may require treatment," it concluded.

When asked for debt advice, using a hypothetical scenario of £20,000 in debt, the chatbot offered general guidance similar to what a friend might suggest. This included making a list of debts, cutting non-essential costs, and two strategies for repayment: prioritising either the highest-interest debts or the smallest balances first.

It also provided contact information for UK organisations that offer free, confidential support, including StepChange, Citizens Advice, and National Debtline.

These findings suggest that previous reports of a complete “ban” are misleading. ChatGPT has long avoided providing professional advice, but continues to offer educational guidance and point users in the right direction.

OpenAI’s official website lists strict Usage Policies to protect users. These prohibit the “provision of tailored advice that requires a license, such as legal or medical advice, without appropriate involvement by a licensed professional.”

Essentially, ChatGPT can teach, guide, and explain, but it cannot act as your doctor or lawyer.

Other policies outlined by OpenAI also prohibit the use of its platform for a range of harmful or unlawful activities. These include:

- Threats, intimidation, harassment, or defamation

- Promoting or facilitating suicide, self-harm, or disordered eating

- Sexual violence or non-consensual intimate content

- Terrorism or violence, including hate-based violence

- The development or use of weapons, including conventional arms or CBRNE materials

- Engagement in illicit activities or services

- Malicious cyber activity or infringement of intellectual property rights

- Real-money gambling

- Attempts to circumvent OpenAI’s safeguards

- Activities related to national security or intelligence purposes without prior review
