ChatGPT is set to loosen its content restrictions just in time for the festive season, with OpenAI confirming that erotica will soon be available for verified adult users. The announcement has prompted a flurry of mixed reactions, with some users declaring: "No one asked for this".

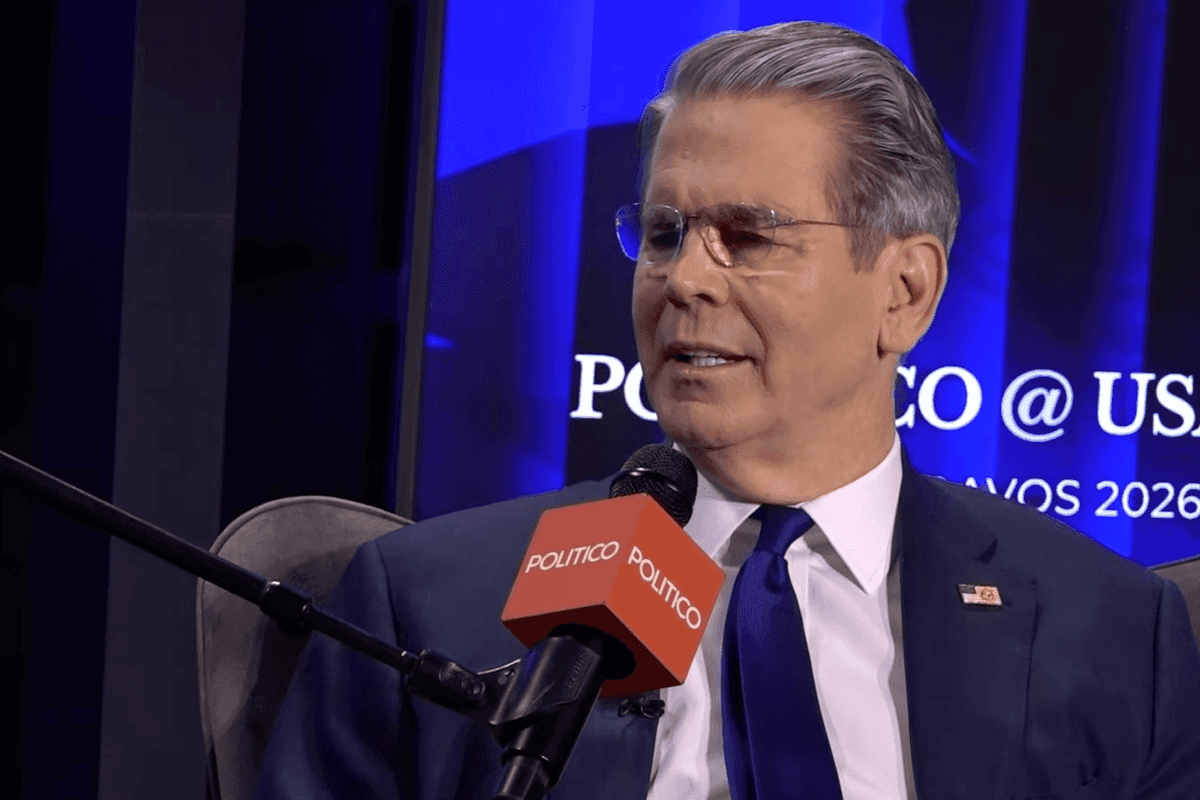

In a statement, OpenAI CEO Sam Altman explained that the AI chatbot was initially made "pretty restrictive" in order to be "careful" around mental health concerns.

However, Altman now believes those measures made ChatGPT "less useful/enjoyable" for users who weren't struggling with such issues.

"Now that we have been able to mitigate the serious mental health issues and have new tools, we are going to be able to safely relax the restrictions in most cases," he said. He added that a forthcoming version will allow users to tailor the tone of responses, whether they want it "to respond in a very human-like way, or use a ton of emoji, or act like a friend."

Mental health safeguards for users under 18 will remain in place. OpenAI is currently developing an age prediction system to help determine whether someone is over or under 18, allowing the ChatGPT experience to be appropriately tailored for different age groups.

The decision to lift limits for adults has been broadly welcomed – but one final detail in Altman’s post left many baffled.

"In December, as we roll out age-gating more fully and as part of our 'treat adult users like adults' principle, we will allow even more, like erotica for verified adults," he wrote.

Online, the confusion set in quickly.

"Seemed awesome til the last paragraph," one user responded.

"That last part is gonna bring some serious issues," another said.

"This s*** keeps getting worse, like literally what do we need erotica for?" a third added.

In response to one user who questioned why age-gating always seems to lead to erotic content, saying, "I just want to be able to be treated like an adult and not a toddler, that doesn't mean I want perv-mode activated," Altman offered a short reassurance.

"You won't get it unless you ask for it," he replied.

Altman also responded to a follower who described ChatGPT shifting from "a person you could actually talk to" to a "compliance bot."

He acknowledged that for "a very small percentage of users in mentally fragile states there can be serious problems," and emphasised the company’s commitment to "learn how to protect those users, and then with enhanced tools for that, adults that are not at risk of serious harm (mental health breakdowns, suicide, etc) should have a great deal of freedom in how they use ChatGPT."
