
Harriet Marsden

Nov 17, 2016

Founder and CEO of Facebook Mark Zuckerberg delivers his keynote on the opening day of the Mobile World Congress at the Fira Gran Via Complex on February 22, 2016 in Barcelona, Spain

Getty

In our 'post-truth' world (Oxford Dictionaries' word of the year), Facebook has been condemned for supposedly facilitating the spread of fake news and propaganda.

Many critics of the social media platform claim that the 'fake news' problem actually swayed people's votes during the US elections, resulting in a victory for Donald Trump.

Mark Zuckerberg strenuously rejected these assertions, claiming that only a "very small" amount of content was fake news, and that both Clinton and Trump were targeted.

Zuckerberg also claims that 99 per cent of news shared on Facebook is verifiable.

However, considering the staggering amount of content shared on a daily basis, that 1 per cent equals a lot of fake news.

Facebook staff have reportedly formed a "secret task force" to examine the company's role in perpetuating false information, but have also claimed that their complex, secretive algorithms are responsible, and therefore that this issue is technically difficult to resolve.

Enter the prodigies, to shame their technological forefathers.

In a move that seems somewhat reminiscent of early-years Zuckerberg himself, four college students cracked Facebook's algorithm conundrum - in just 36 hours.

During a Princeton University hackathon (partly sponsored by Facebook) the students developed a project entitled 'FiB: Stop living a lie.'

Essentially, it's a Chrome browser extension that goes through your feed as you browse and verifies each post in real time. If a post is found to be false, the plug-in attempts to locate the truth.

The plug-in actually managed to function as a legitimate news authenticity check, while also differentiating between opinion and fact.

Meet the team:

Nabanita De, second-year Master's student in computer science, University of Massachusetts

Anant Goel, freshman, Purdue

Mark Craft, sophomore, University of Illinois at Urbana-Champaign

Qinglin Chen, sophomore, University of Illinois at Urbana-Champaign

Their mission statement (according to their project report):

In the current media landscape, control over distribution has become almost as important as the actual creation of content, and that has given Facebook a huge amount of power.

The impact that Facebook newsfeed has in the formation of opinions in the real world is so huge that it potentially affected the 2016 election decisions, however these newsfeed were not completely accurate.

Our solution? FiB because With 1.5 Billion Users, Every Single Tweak in an Algorithm Can Make a Change, and we dont stop at just one.

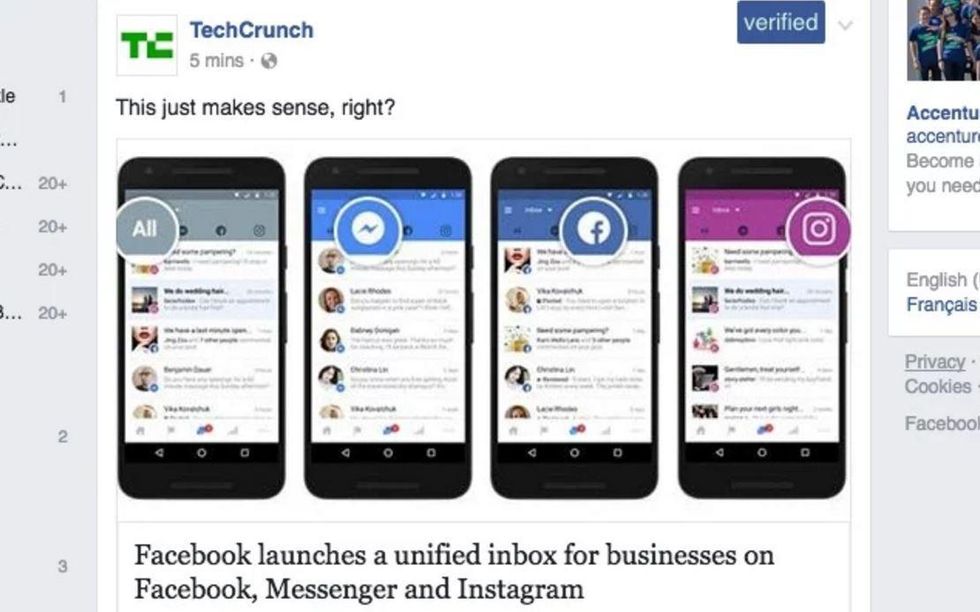

How their browser plug-in works

As told by De to Business Insider:

Using artificial intelligence, it classifies every post (pictures, links, malware, fake news) as verified or non-verified

It considers the reputation of the website, and compares it against malware and phishing website databases

It searches for the content of the post and retrieves high-confidence results, summarising the findings for the user

With pictures of tweets, it converts the image to text and uses the username mentioned to check whether that user ever actually posted the tweet

It adds a tag to say whether the story has been verified
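For the technically curious, the steps De describes can be pictured roughly as follows. This is only an illustrative Python sketch, not the students' actual code: the domain lists, the `Post` type, the `classify` and `tag` helpers and the corroboration threshold of two sources are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

# Hypothetical stand-ins for the malware/phishing databases and the
# website reputation data mentioned above (not the real sources).
BLOCKLISTED_DOMAINS = {"phishy.example"}
REPUTABLE_DOMAINS = {"news.example"}


@dataclass
class Post:
    text: str
    link: Optional[str] = None


def domain_of(url: str) -> str:
    """Extract the lower-cased host name from a URL."""
    return (urlparse(url).hostname or "").lower()


def classify(post: Post, corroborating_sources: int = 0) -> str:
    """Label a post 'verified' or 'non-verified'.

    corroborating_sources is an assumed input: the number of
    high-confidence search results that back up the post.
    """
    if post.link:
        domain = domain_of(post.link)
        if domain in BLOCKLISTED_DOMAINS:
            return "non-verified"
        if domain in REPUTABLE_DOMAINS:
            return "verified"
    # Assumed threshold: two independent sources count as corroboration.
    return "verified" if corroborating_sources >= 2 else "non-verified"


def tag(post: Post, corroborating_sources: int = 0) -> str:
    """Prefix the post text with its verification label."""
    return f"[{classify(post, corroborating_sources)}] {post.text}"
```

Calling `tag(Post("Breaking story", "https://news.example/story"))` would label the post as verified, while a link to a blocklisted domain is marked non-verified no matter how many search results appear to support it.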

The students have released the plug-in as an open-source project, so now anybody can install or modify it.

Obviously, simply verifying whether a story is true or not cannot stop the spread of misinformation, lies or propaganda.

In the current climate, it's likely that these sorts of verifications could either be ignored or be accused of being false or biased themselves.

Nevertheless, this is an extremely promising start.

And the most impressive thing? All four were new to JavaScript, Python, Flask servers and AI services.

So not only did they crack the problem in 36 hours, but they learnt all the necessary technology in that time, too.

Take a bow.
