
Joe Vesey-Byrne

Sep 10, 2017

Picture: iStock/Getty Images

LGBT+ groups have slammed a study from Stanford Graduate School of Business which claims that computers can predict a person's sexuality from photographs of their face better than a human can.

The study, due to be published in the Journal of Personality and Social Psychology, claimed that 'deep neural networks are more accurate than humans at detecting sexual orientation from facial images'.

It was first reported on in The Economist, and has caused controversy among LGBT+ activists.

Critics such as Glaad and the Human Rights Campaign (HRC) have said that the study does not show an AI correctly identifying gay people from images, and that reporting it as such is 'false'.

The authors of the study, third-year Stanford student Yilun Wang and assistant professor Michal Kosinski, warned that it had implications for privacy.

The study

Wang and Kosinski used the Face++ program to analyse 35,326 facial images from an unspecified American dating website.

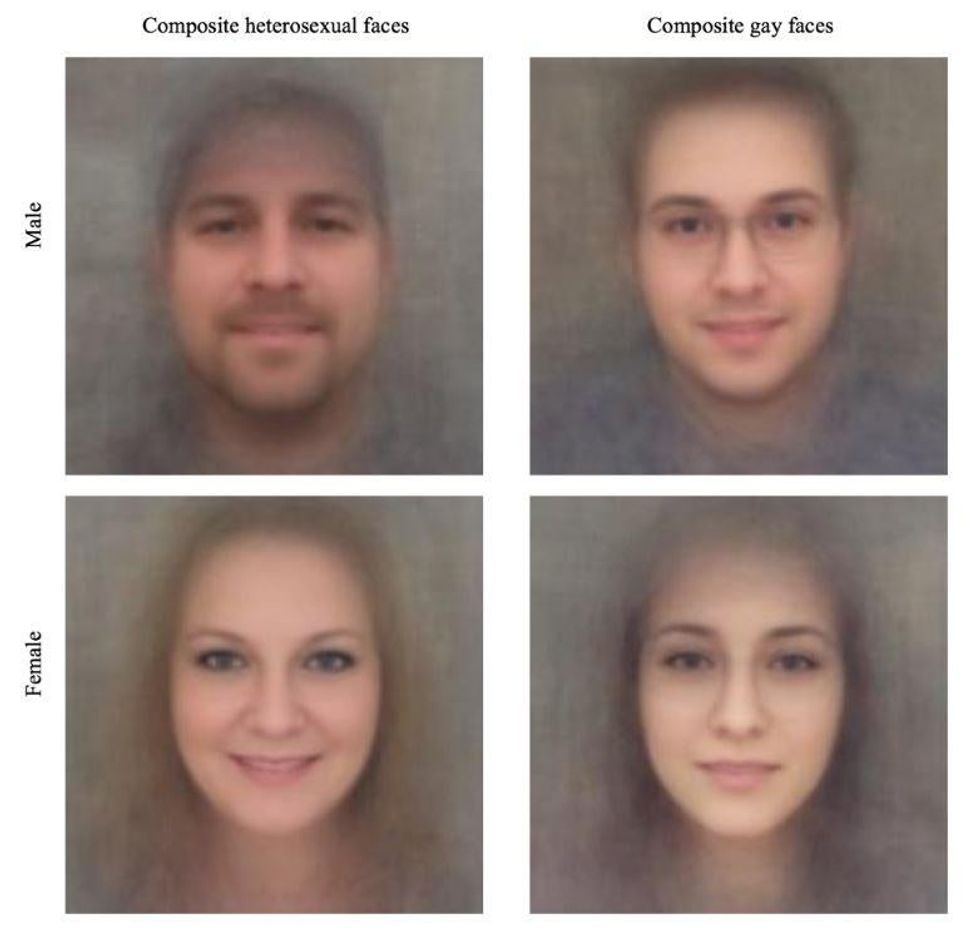

The algorithm used a predictive model called 'logistic regression' to find correlations between the facial features of the participants and their sexuality.
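The article names the model but gives no further details. As a generic illustration only, logistic regression turns a weighted sum of numeric features into a probability using the sigmoid function, and the weights are fitted to labelled examples. The sketch below is plain Python with two made-up features and invented labels; the function names are hypothetical, and this is not Wang and Kosinski's actual pipeline.

```python
import math
import random

def sigmoid(z):
    # Maps any real-valued score to a probability between 0 and 1.
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(X, y, lr=0.1, epochs=500):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of the log-loss with respect to the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the average gradient.
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b

def predict_proba(w, b, x):
    # Probability that example x belongs to class 1 under the fitted model.
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical toy data: two invented numeric "features" per person and a
# 0/1 label. Only the first feature is related to the label.
random.seed(0)
X, y = [], []
for _ in range(100):
    label = random.randint(0, 1)
    centre = 1.0 if label else -1.0
    X.append([random.gauss(centre, 1.0), random.gauss(0.0, 1.0)])
    y.append(label)

w, b = train_logistic_regression(X, y)
```

The fitted weight on the first feature comes out positive because that feature is correlated with the label; a "correlation" found this way says nothing about why the feature and the label are related.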

Conclusions drawn about each person were compared to the orientation listed on their dating profile, as determined by the gender they were seeking to meet.

The algorithm could tell the difference between gay and straight men 81 per cent of the time, and between gay and straight women 71 per cent of the time, when shown a single image.

By contrast, humans were correct 61 per cent of the time for men, and 54 per cent of the time for women.
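Figures like these are commonly read as pairwise accuracy: shown one gay and one straight person, how often the higher score goes to the gay person. That measure equals the area under the ROC curve (AUC). Assuming that reading, a minimal sketch of how it is computed from a model's scores looks like this; the scores are invented for illustration and the function name is hypothetical.

```python
from itertools import product

def pairwise_accuracy(scores_positive, scores_negative):
    """Share of (positive, negative) pairs in which the positive case gets
    the higher score, with ties counted as half. This equals the ROC AUC."""
    wins = 0.0
    for sp, sn in product(scores_positive, scores_negative):
        if sp > sn:
            wins += 1.0
        elif sp == sn:
            wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))

# Hypothetical model scores, invented for illustration only.
gay_scores = [0.9, 0.7, 0.6, 0.4]
straight_scores = [0.8, 0.5, 0.3, 0.2]
print(pairwise_accuracy(gay_scores, straight_scores))  # prints 0.75
```

Under this reading, a 50 per cent score is chance, so 81 per cent means the model ranks a random gay/straight pair correctly about four times in five; it is not the share of individuals correctly labelled in a general population.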

When the algorithm was shown five images of a single person, its accuracy increased to 91 per cent for men and 83 per cent for women.
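One plausible reason more photos help is that combining several per-image estimates smooths out noise from any single picture, such as lighting, angle or expression. The article does not say how the five images were combined; the sketch below simply assumes the per-image probabilities are averaged, and the numbers and function name are hypothetical.

```python
from statistics import mean

def person_score(image_probabilities):
    """Combine per-image probabilities for one person into a single score
    by taking their mean (an assumed aggregation rule)."""
    if not image_probabilities:
        raise ValueError("need at least one image")
    return mean(image_probabilities)

# Hypothetical per-image outputs for one person, invented for illustration.
five_images = [0.62, 0.55, 0.71, 0.48, 0.66]
print(round(person_score(five_images), 3))  # prints 0.604
```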

Written all over your face

Sexual orientation was predicted using fixed features, such as nose shape, and transient features, such as grooming style.

Fixed features were based on the 'prenatal hormone theory', which holds that exposure to hormones in the womb influences both facial appearance and sexual orientation.

The authors suggested that the study found 'strong support' for the theory that sexuality is determined by hormone exposure before birth.

Kosinski wrote about the repercussions of their findings:

...companies and governments are increasingly using computer vision algorithms to detect people’s intimate traits, our findings expose a threat to the privacy and safety of gay men and women.

It is thus critical to inform policymakers, technology companies and, most importantly, the gay community, of how accurate face-based predictions might be.

LGBT+ groups slam the paper

The study has limitations: for instance, it was only tested on white people.

It suggested that gay men had lighter skin than straight men, possibly due to testosterone (which is linked to melanin production).

Bisexuals were excluded, and according to LGBTQ Nation, persons whom the researchers decided 'did not look like the gender in their profiles' were also taken out of the study.

In a statement on their website, Glaad and the Human Rights Campaign (HRC) condemned the way in which the study had been presented.

Glaad's chief digital officer Jim Halloran wrote:

Technology cannot identify someone’s sexual orientation.

What their technology can recognise is a pattern that found a small subset of out white gay and lesbian people on dating sites who look similar.

Those two findings should not be conflated.

He continued:

This research isn’t science or news, but it’s a description of beauty standards on dating sites that ignores huge segments of the LGBTQ community, including people of color, transgender people, older individuals, and other LGBTQ people who don’t want to post photos on dating sites.

The two groups made the following claims about the Stanford study:

- It only looked at white people.

- Crucial information such as sexual orientation and age was taken at face value, and was not independently verified.

- It assumed no difference between sexual orientation and sexual activity.

- Any sexual orientation other than homosexual or heterosexual was excluded from consideration.

- The study only looked at gay men and women who are open about their sexuality, are white, of a certain age (18-40), and are on dating sites.

- Although 81 per cent of gay men could be identified correctly, heterosexual men were identified correctly less than 20 per cent of the time.

- The research states: "Outside the lab, the accuracy rate would be much lower" (the "lab" here being certain dating sites) and is "10 points less accurate for women".

- Superficial characteristics such as clothing style, weight, and facial expressions were used.

According to Glaad and the HRC, the two organisations spoke with Stanford University in the months prior to the publication of Kosinski and Wang's research.

The HRC and Glaad reportedly raised concerns about the study and the potential for its findings to be overinflated.

The two groups concluded:

Based on this information, media headlines that claim AI can tell if someone is gay by looking one photo of your face are factually inaccurate.

Nate Wright pointed out the hype that these kinds of sensationalist artificial intelligence stories can generate.

In a thread on Twitter, Wright explained problems with reporting the story along the lines of 'this machine knows you are gay'.

In particular, the results showed that the AI was correct in spotting gay men about four times out of five, compared with three times out of five for humans.

In the authors' notes to the paper, Kosinski acknowledged the possibility that the study could make matters worse for the LGBT+ community:

We were really disturbed by these results and spent much time considering whether they should be made public at all.

We did not want to enable the very risks that we are warning against.

In March 2017, Kosinski addressed the CeBIT Global Conference in a lecture titled 'The End of Privacy'.

Speaking to the Guardian, Kosinski said:

One of my obligations as a scientist is that if I know something that can potentially protect people from falling prey to such risks, I should publish it.

Rejecting the results because you don’t agree with them on an ideological level … you might be harming the very people that you care about.

He also pointed out that corporations and governments are already using facial recognition technology, so studying its efficacy is useful.

I hope that someone will go and fail to replicate this study … I would be the happiest person in the world if I was wrong.

HT LGBT Networks, The Guardian
