
Evan Bartlett

Oct 26, 2015

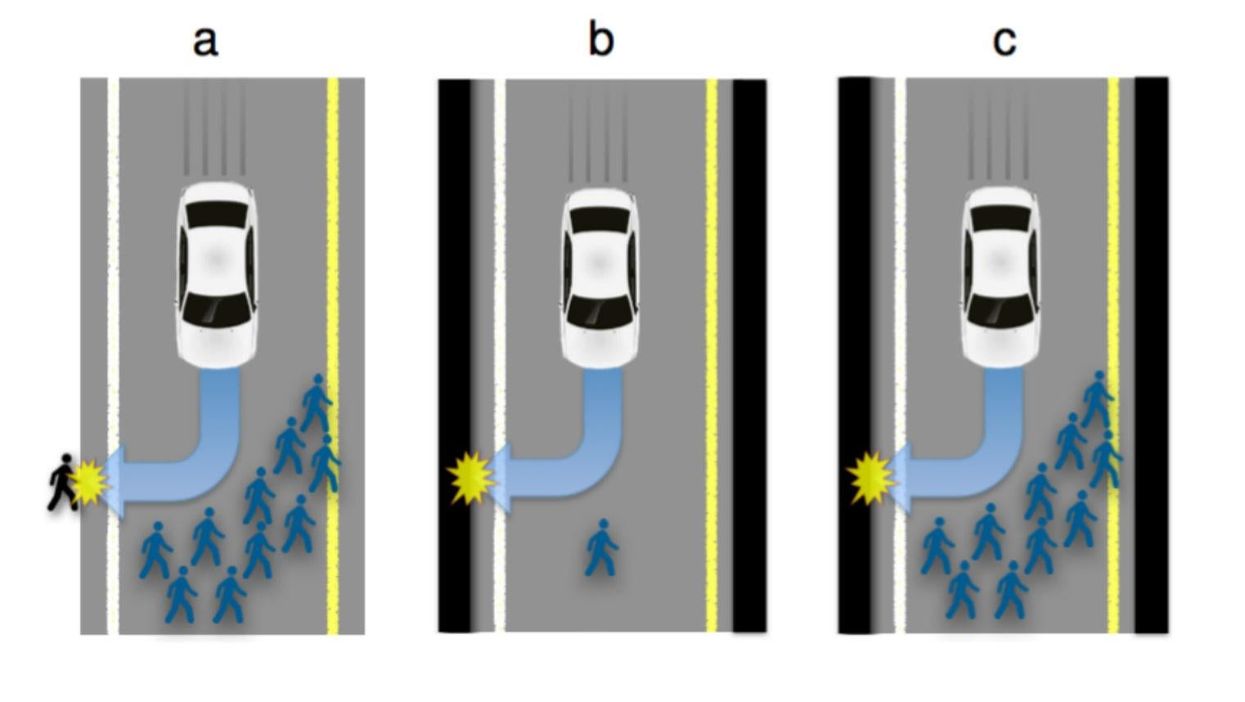

(Graphic: Cornell University)

"It's too dangerous. You can't have a person driving a two-ton death machine," Elon Musk, one of the pioneers of the driverless car, said back in March.

Some experts predict that driverless technology will eventually become so advanced, and so safe, that you may not even be able to get insurance for a manually-driven car.

While driverless technology continues to advance, one thing that Musk and other technologists haven't yet been able to solve is an ethical dilemma that means humans might have to programme robots to kill in the case of an unavoidable accident.

The Trolley Problem

There is a runaway trolley barrelling down the railway tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person on the side track. You have two options: 1. Do nothing, and the trolley kills the five people on the main track or 2. Pull the lever, diverting the trolley onto the side track where it will kill one person. Which is the correct choice?

- via the Independent

Imagine in the future that you are deciding whether or not to buy a self-driving car, but the self-driving car is programmed to sacrifice your own life if it means saving 10 others.

While that would represent the lesser of two evils for society as a whole (the utilitarian approach), those from the deontological school of thought would argue that the car should not be programmed to "actively" kill the driver and should instead be left to plough into the larger group, as nature intended.

But then manually-driven cars, or those not programmed to avoid higher numbers of fatalities, are likely to result in more accidents. It is, as Technology Review points out, a catch-22 situation.

While the Trolley Problem has been around for a long time, a group of researchers in the US has been trying to find a solution for carmakers that is rooted in public opinion.

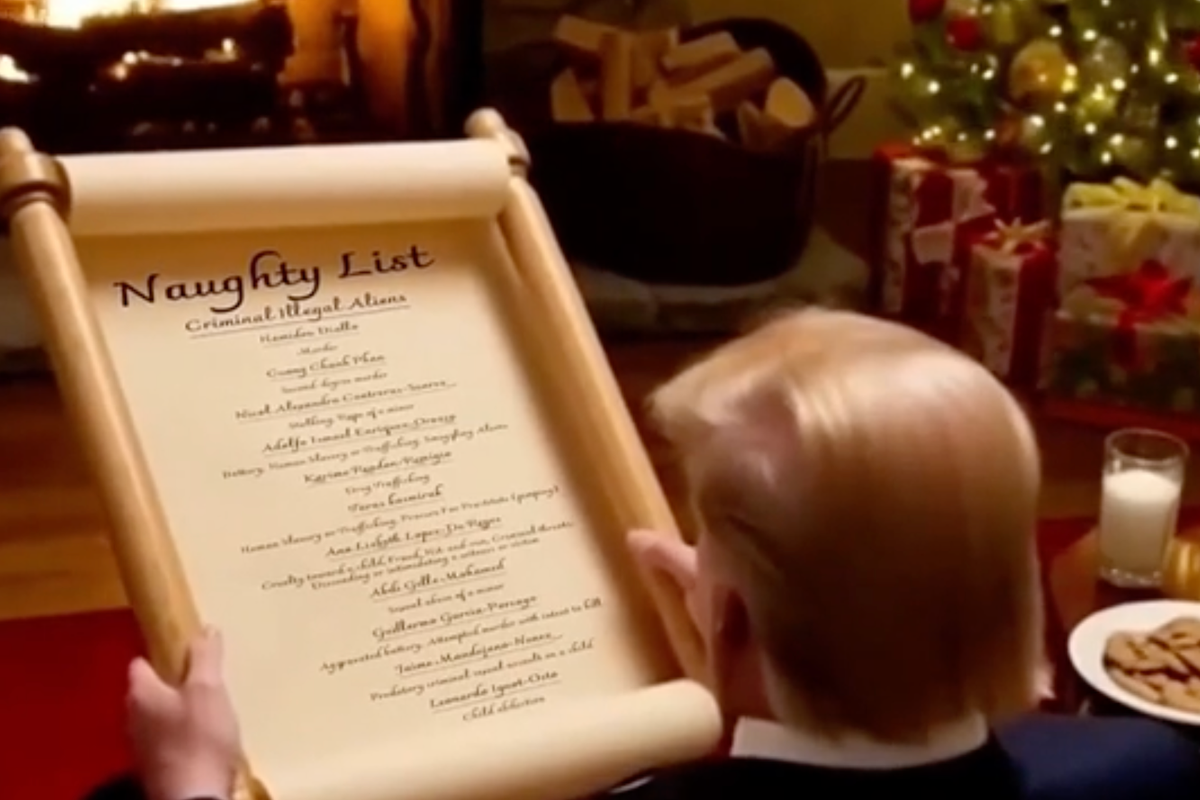

Jean-François Bonnefon and his team at Cornell University have been testing members of the public on a range of scenarios, reasoning that people are more likely to accept a scenario that is in line with their own views.

The team have presented three different scenarios.

Imagine you are in the self-driving car. What would you do in the following situations?

Their findings so far suggest that most people want everyone else to drive around in "utilitarian-programmed" cars... unless it is them doing the driving.

Let us know what you think about the dilemma in the comments below.
