Science & Tech

Sinead Butler

Jan 05, 2026

French authorities probe X chatbot Grok over AI-generated nude images


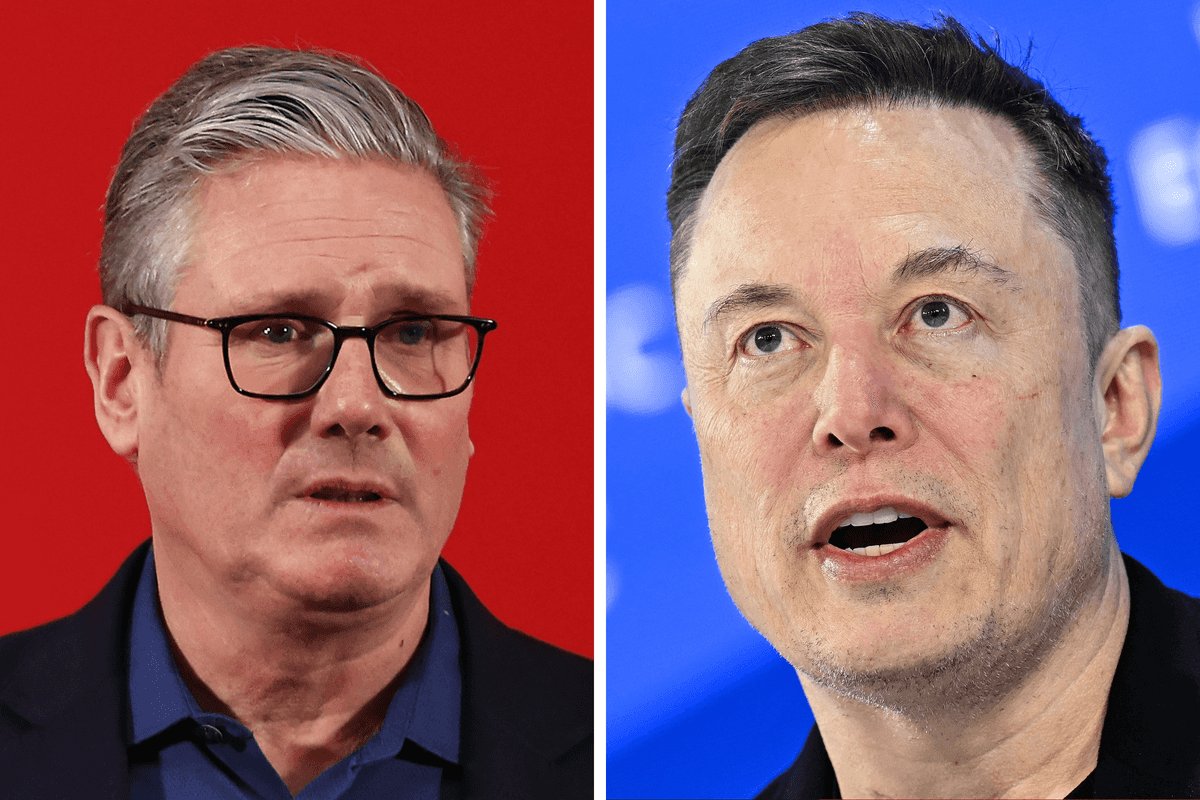

Three countries - France, India, and now Malaysia - are threatening to take legal action against Elon Musk's Grok due to the AI chatbot creating sexualised images of women and children on the social media platform X.

In recent days, Grok has been digitally stripping images of women and children down to bikinis when users prompt it to do so, and other users have complained about the tool as a result.

Abuse complaints first emerged last month, however, following the rollout of an 'edit image' button that enables users to modify images on the social networking site.

The rollout has prompted concern from various countries - here's what each had to say.

Malaysia

At the weekend, Malaysia became the latest country to take a stand, with authorities confirming they have launched an investigation after complaints that Grok was being used to manipulate images of women and minors for indecent and harmful purposes.

The New Straits Times reported that Grok was also editing images of Malaysian women by removing their headscarves.

The authorities added that X users who allegedly violated the law will be investigated, and that the company's representatives will be summoned.

“While X is not presently a licensed service provider, it has the duty to prevent dissemination of harmful content on its platform,” said the Communications and Multimedia Commission. The commission has also issued a warning to those creating or distributing the harmful content, which is an offence under Malaysian law.

India

Meanwhile, India has instructed X to submit a report to the electronics and information technology ministry within 72 hours on the corrective action being taken, and warned that failure to comply could see action under criminal and IT laws.

The ministry has also requested a comprehensive review of the AI chatbot to ensure it does not generate content containing “nudity, sexualisation, sexually explicit or otherwise unlawful content”.

The government could also consider stricter regulation of social media platforms over inappropriate AI-generated content.

France

France believes Grok-created images potentially violated the EU’s Digital Services Act, and accused the chatbot of generating “clearly illegal” sexual content on X without people’s consent.

An investigation into X by the public prosecutor's office in Paris will now also include these latest accusations that users were using Grok to generate and distribute harmful content regarding children.

The investigation was initially launched back in July, over reports that the platform’s algorithm was being manipulated to enable foreign interference.

Dani Pinter, chief legal officer and director of the Law Center for the National Center on Sexual Exploitation, said X had failed to pull abusive images from its AI training material.

“This was an entirely predictable and avoidable atrocity,” Pinter told Reuters.

What has Elon Musk said about this?

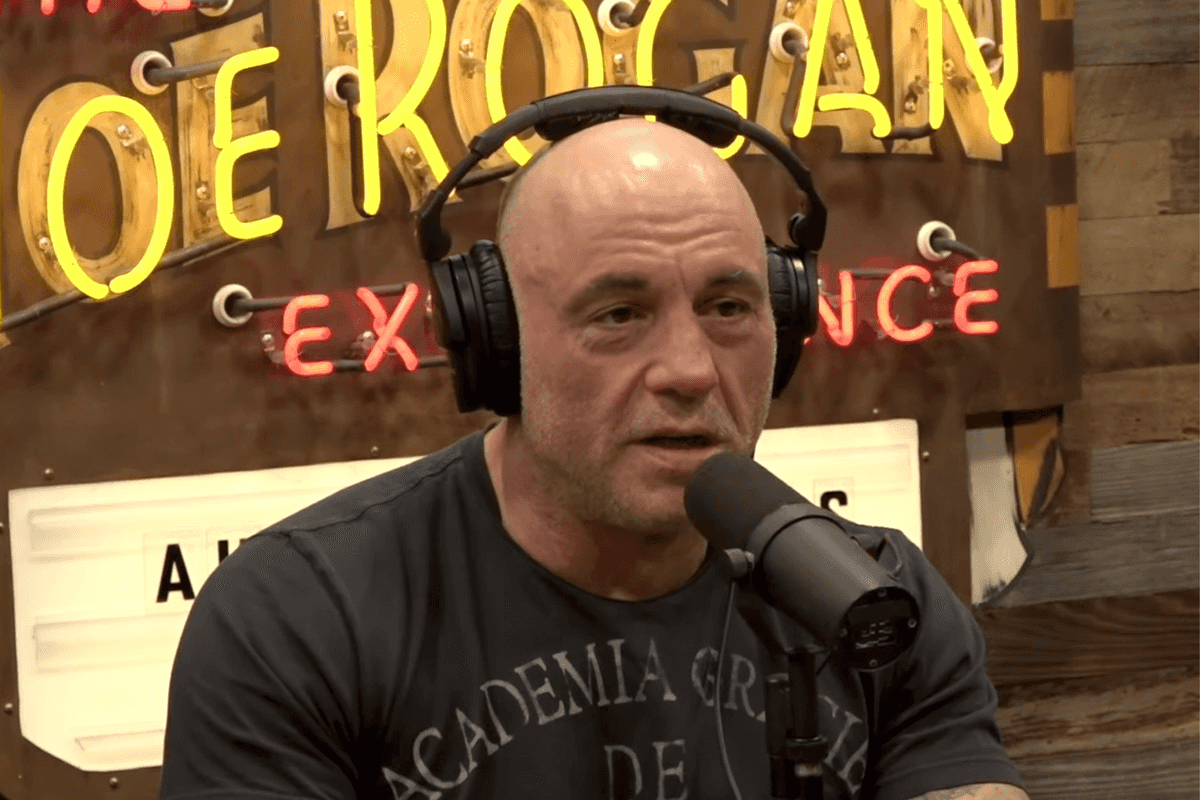

Initially, on Friday, Musk responded with crying-laughing emojis to AI edits of famous people, including himself, in bikinis.

He had a similar response of crying-laughing emojis to an X user who described how their timeline feed resembled a bar packed with bikini-clad women.

But as the criticism spread, Musk took to X to say that his platform would take action against this illegal and harmful content by removing it and permanently suspending accounts.

“Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content,” he said in a post.

