Iana Murray

Sep 21, 2020

Last week, Twitter users ran an experiment, and it went about as disastrously as you could imagine.

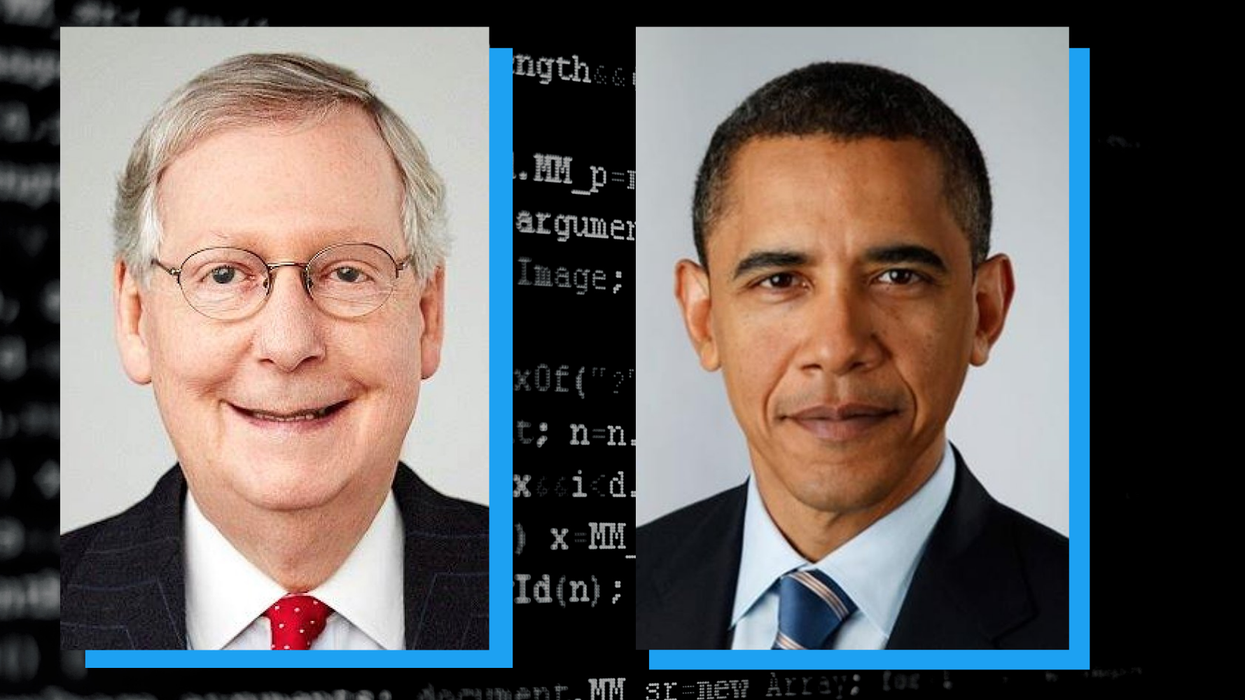

Entrepreneur Tony Arcieri posted two photos: one with former president Barack Obama’s headshot above senator Mitch McConnell’s, and one with the order reversed. He captioned the images: “Which will the Twitter algorithm pick? Mitch McConnell or Barack Obama?” In both cases, Twitter’s image preview feature picked McConnell.

To many, it was an obvious example of Twitter’s algorithm preferring white faces over Black. You could play devil’s advocate and argue that maybe McConnell is just more relevant right now, but similar experiments suggested that’s not the case.

For example, the same thing happens with stock photos...

And even The Simpsons characters.

It's not just race either. When given the option, the algorithm also appears to prefer men over women.

After several tweets exposing the platform’s apparent bias went viral, Twitter apologised and promised to “share what we learn”:

Our team did test for bias before shipping the model and did not find evidence of racial or gender bias in our testing.

But it’s clear from these examples that we’ve got more analysis to do. We’ll continue to share what we learn, what actions we take, and will open source our analysis so others can review and replicate.

But Twitter isn’t the first tech company to be accused of having a racist algorithm.

Just last month, the Home Office suspended the algorithm it used to “streamline the process” of deciding visa applications, after allegations that it contained “entrenched racism”.

The algorithm allegedly judged visa applicants on categories including nationality, and visas were more likely to be denied if the applicant fell under a “secret list of suspect nationalities”.

The Home Office denied the characterisation, but the Joint Council for the Welfare of Immigrants (JCWI) and digital rights group Foxglove have launched a legal challenge against the system.

And in 2015, it was revealed that Google’s image algorithm would repeatedly label photos of Black people as gorillas, chimpanzees or monkeys. The company’s solution? Remove all primate labels altogether instead of… you know, teaching its algorithm to not be racist.

In fact, there are a disturbing number of examples that demonstrate the racism embedded in algorithms.

There are the healthcare algorithms that discriminate against Black people in the US, and a racist predictive policing system that, again, routinely targets Black people. It was also recently reported that Facebook and Instagram are investigating their own algorithms after accusations of racism and bias.

How do algorithms become racist?

In the simplest terms, algorithms can be taught bias through two major avenues: the data given to the algorithm and the inherent biases of the engineers themselves.

Algorithms are “trained” by being exposed to data. For example, the data might teach an algorithm what a door looks like: it is rectangular, it has a doorknob and it swings open on hinges. But this gets harder with messier, less straightforward data, such as data about people. Algorithms are at the mercy of the data people give them, and if the data about a certain demographic is inaccurate or incomplete, the algorithm will learn those biases.
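To make the data problem concrete, here is a deliberately simplified Python sketch. It is hypothetical and is not how Twitter’s cropping model, or any real system, works: the groups “A” and “B”, the `train` and `pick` functions and all the numbers are invented for illustration. The “model” only learns how often each group was chosen in its training data, so a skew in that data becomes a skew in its choices.

```python
from collections import Counter

# Hypothetical toy training data: (group, was_chosen) pairs.
# Group "A" was chosen far more often, purely because of how the
# data was collected, not because of anything about the images.
training_data = (
    [("A", True)] * 90 + [("A", False)] * 10
    + [("B", True)] * 20 + [("B", False)] * 80
)

def train(data):
    """Learn, for each group, the fraction of examples that were chosen."""
    chosen = Counter()
    total = Counter()
    for group, was_chosen in data:
        total[group] += 1
        if was_chosen:
            chosen[group] += 1
    return {group: chosen[group] / total[group] for group in total}

def pick(model, candidates):
    """Return the candidate whose group has the highest learned score."""
    return max(candidates, key=lambda group: model[group])

model = train(training_data)
print(model)                    # {'A': 0.9, 'B': 0.2}
print(pick(model, ["B", "A"]))  # A
```

Nothing in the code mentions race or gender, yet it will always prefer group A, because that is what the lopsided training data taught it.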

One study found that if an artificial intelligence were tasked with reading the entire internet, it would become sexist and racist, because it would absorb the prejudices in the comments people write.

Who builds and signs off on these algorithms also matters. Even technology is fallible in the hands of people with their own inherent biases. The tech industry is overwhelmingly white and male, so those are the people who decide on the ethics of artificial intelligence. Problems often only surface once everyone else starts using these algorithms.

We like to think of computers as detached and objective, but in reality, algorithms can entrench prejudice even further.

Incidents like these happen again and again, and Twitter’s discriminatory algorithm likely won’t be the last. Institutional racism runs deep in tech, and with more and more tech companies being exposed for their suspicious practices, these problems aren’t going away any time soon.
