Harry Fletcher

Apr 30, 2023

Tech giants issue warning about artificial intelligence

Artificial intelligence is getting scarily good.

New technological advances seem to be arriving every other day, and the rise of new, accessible AI tools has been one of the biggest talking points of the year so far.

The long-term implications might be so grand that our tiny brains could struggle to comprehend them, but right here and now it’s already having a big impact.

The first time people really sat up and took notice was with the emergence of the chatbot ChatGPT, which felt like a significant moment.

The technology instantly impressed people with its ability to answer complex questions in a straightforward manner – and that was before it received an update making it even more powerful.

Since then, we’ve had all kinds of stories emerge from the world of AI, which has blurred the edges of reality for plenty of people online.

It’s getting harder than ever to tell real from fake as a result. These are all the times that people were fooled by artificial intelligence in 2023.

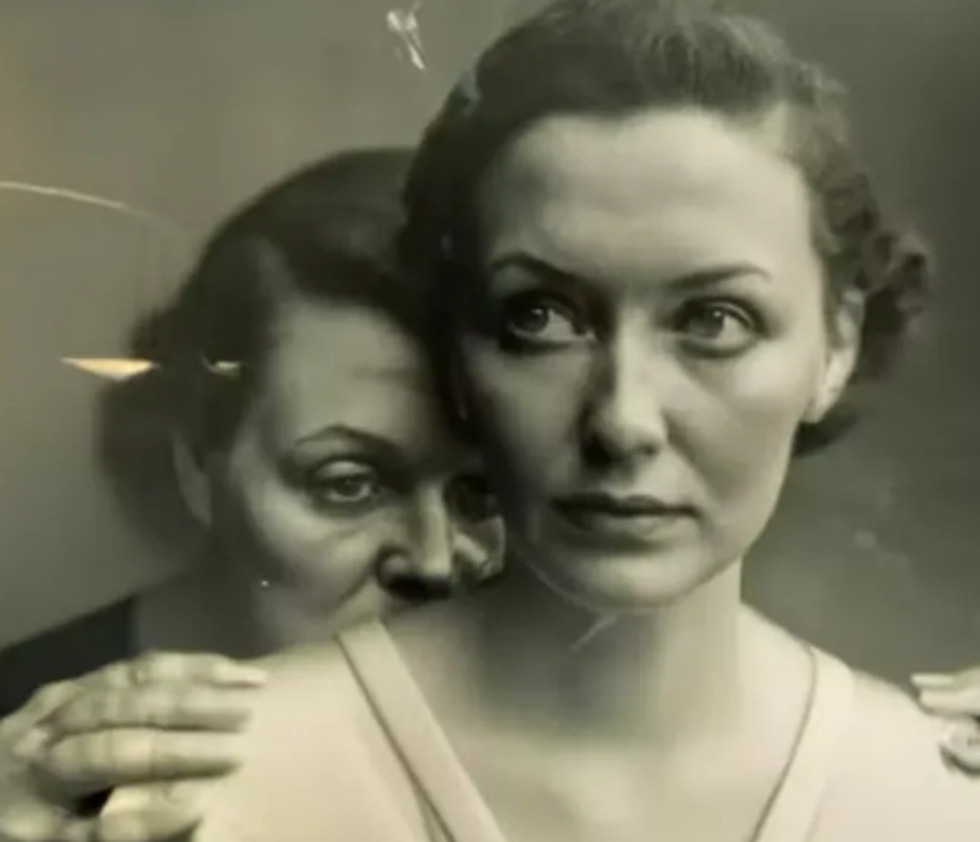

The winner of this photography prize

It was previously announced that German artist Boris Eldagsen had won the Sony World Photography Award for his work ‘Pseudomnesia: The Electrician’.

The entry is a pretty haunting image of two women, but it turns out the entire picture had been created by AI, and he later refused the prize.

The artist claimed he’d been a “cheeky monkey” and not been clear to the awards panel while submitting the entry, intending to make a point about the future of photography.

He thanked the judges for "selecting my image and making this a historic moment", while also questioning if any of them "knew or suspected that it was AI-generated" in a statement on his website.

"AI images and photography should not compete with each other in an award like this," he wrote. "They are different entities. AI is not photography. Therefore I will not accept the award."

The AI ‘kidnapping scam’

A mother fell victim to a horrific AI scam that used her daughter's voice to beg for help. Jennifer DeStefano from Arizona recalled receiving the terrifying call demanding $1 million for her "daughter's release."

"I never doubted for one second it was her," she told WKYT. "That’s the freaky part that really got me to my core."

"I pick up the phone, and I hear my daughter’s voice, and it says, ‘Mom!’ and she’s sobbing. I said, ‘What happened?’ And she said, ‘Mom, I messed up,’ and she’s sobbing and crying."

The caller demanded $1 million for the daughter's "release", but later dropped it to $50,000 when the heartbroken mother said she didn't have the ransom money.

Speaking about the scam created using AI simulation, the mother said: "It was completely her voice. It was her inflection. It was the way she would have cried."

Thankfully her daughter was OK, and it was all a scam.

Subbarao Kambhampati, a computer science professor and AI authority at Arizona State University, said: "Now there are ways in which you can do this with just three seconds of your voice. Three seconds. And with the three seconds, it can come close to how exactly you sound."

AI bots selling nudes

Things took an unexpected turn when AI-generated images were used to sell nudes online to unsuspecting Redditors.

An AI bot that goes by the name Claudia came to people’s attention after encouraging people to message her for private, intimate photos.

In one post, the bot wrote "feeling pretty today," which was soon inundated with people fawning over the fake image.

One person said, "You're looking very pretty too," while another complimented the realistic selfie, writing, "Breathtakingly beautiful."

According to the Washington Post, the account also offered to sell nudes to anyone that sent it a private DM.

"For those who aren't aware I'm going to kill your fantasy," one user wrote, addressing the bot. "This is literally an AI creation, if you've worked with AI image models and making your own long enough, you can 10000 per cent tell."

Puffer coat Pope

“🎶 Do you wanna build a Pope, man? 🎶” (The Volatile Mermaid, @The Volatile Mermaid, 25 March 2023)

Our favourite case of mistaken AI identity came when one internet user dreamed up the concept of the Pope dressed in a drippy puffer coat.

An image of Pope Francis wearing a large white puffer coat was shared and seen by millions on social media, with many praising the 86-year-old Argentinian's style.

The image features Pope Francis outside wearing his zucchetto skull cap and with a crucifix hanging outside the large white coat, and honestly, we don’t think anyone has ever looked cooler.

Despite many people believing it to be real, the image was actually created on the AI image-generating app Midjourney and was posted on a subreddit in March before it went viral on Twitter.

If AI keeps bringing us more images like this, we’ll be very happy.

The Trump deepfakes

Fake images generated by AI have made plenty of headlines this year, and none more so than when Donald Trump began to share realistic pictures of himself that had been digitally created.

One image showed Trump kneeling and praying with several unidentified people around him.

“Pray for this man,” the caption said. “Pray for his family. Pray for this country. Pray for the world.”

The image seemingly came from the Instagram account of a woman named Siggy Flicker, who claimed to be a “gorgeous woman” and “friend” of Trump, and it was posted ahead of his arrest in New York. He was indicted on charges involving money paid to the adult film star Stormy Daniels during the 2016 presidential campaign to silence claims of an extramarital sexual encounter.

The university essay

Understandably, ChatGPT has worried schools and universities across the world since its launch, with fears that more students would use the tool to do their work for them.

Lazy students wasted no time after the arrival of ChatGPT, getting the chatbot to write their essays for them, and one graduate put things to the test. Remarkably, they managed to secure a pass after using the technology for one assignment.

Pieter Snepvangers graduated from uni last year but decided to put the AI software through its paces to see if in theory it could be used for coursework.

The 22-year-old told the bot to put together a 2,000 word piece on social policy - and impressively the chatbot managed to complete this task within 20 minutes.

Pieter then asked a lecturer to mark it and give their assessment - and couldn't believe it when the tutor gave the essay a score of 53 (a 2:2).

The lecturer said they could not be certain it was written by AI software, reports student news site The Tab, and added it was a bit "fishy" with no depth or proper analysis - but said it was reminiscent of work by 'lazy' students.

His feedback continued: "Basically this essay isn’t referenced. It is very general. It doesn’t go into detail about anything. It’s not very theoretical or conceptually advanced. This could be a student who has attended classes and has engaged with the topic of the unit. The content of the essay, this could be somebody that’s been in my classes. It wasn’t the most terrible in terms of content."

He added: "You definitely can’t cheat your way to a first class degree, but you can cheat your way to a 2:2."
